OpenAI Leadership Change, and more

In this post, you will read about our new CTO, LLMs in production, the OpenAI news that dropped on Friday, and what it takes to keep going at a startup!

In my Substack, Deep Random Thoughts, I share a randomly selected set of my writing and updates every week. My posts will be related to LLMs (and AI in general), product dev and UX, health (founders’ flavor), startup-related topics, and, of course, the events we run!

Asks and Announcements

One-Day Workshop on LLM Evaluation: add to your calendar

Last week at Aggregate Intellect!

The big news of the week was onboarding Osh, a mentor and friend, as the CTO of Aggregate Intellect. Osh has been part of our journey in one shape or another since the very beginning of our company’s life. I have always looked up to him and have enormous respect for what he has done in his career, how he thinks, and the ambitions he has. I am excited to go through our next chapter with him and to accelerate our engineering work by tapping into his expertise!

This past week I also went to the SaaS North conference! It was an interesting experience to be at a SaaS-focused event. There were many talks that I admittedly didn’t attend (my argument was that I was there to meet people, not to listen to them, because I can do the listening more efficiently with the infinite list of podcasts I need to catch up on).

I found a handful of good partnership leads, and we will see where they go. It was fascinating to learn about everyone’s journeys and ambitions: Raj, a cybersecurity service provider who found his niche with software “automating himself out of the loop”; Andrew, who worked in university IT and founded an interesting software company for streamlining university work; Amanda, who created a learning design system focused on inclusivity; and many more.

Back in BAU (business-as-usual) land, we made good progress on some of the deals we are working on and are getting closer to our first commercial product deal! Exciting times!

Last week’s unsung hero

I wasn’t planning to go to SaaS North until a few days before the event, when Sadegh offered me his extra ticket and also took care of the logistics: getting there, where to stay, and everything else.

Thank you, Sadegh, for being a generous friend.

LLM Stuff

In our recent LLM workshop, Abhimanyu Anand discussed the challenges of deploying language models in production and ways to address them. The economic potential of LLMs is significant, but concerns about cost, security, and safety persist. Abhi highlighted the risks of poorly executed LLM integrations in production applications, citing examples such as Google's Bard chatbot and Microsoft's OpenAI-powered Bing Chat. Moving from prototype to production is hard because it is uncertain how well these risks can be mitigated. Abhi defined a production environment as one that directly or indirectly affects users and operates at scale. Open-source solutions are often preferred for their transparency and control, but they come with their own challenges.

Topics:

---------

Challenges of Using LLMs in Production Environments and Effective Solutions

* Training a model from scratch is expensive, so fine-tuning commercial or open-source models is a common approach.

* Choosing the right fine-tuning technique and hardware can reduce both cost and training time.

* Model optimization techniques such as quantization (storing weights at lower precision) and continuous batching (serving many requests together) can reduce memory use and increase throughput.

* By addressing these challenges effectively, companies can harness the economic potential of LLMs while mitigating risks.
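Quantization is only named in passing above, so here is a rough illustration of the core idea (not taken from the workshop): a minimal sketch of symmetric "absmax" int8 quantization in plain Python, assuming a single scale for the whole tensor. The function names are hypothetical. Real LLM quantization schemes (per-channel or per-group scales, 4-bit formats, outlier handling) are considerably more involved.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric (absmax) quantization of float weights to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest-magnitude weight onto 127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the stored scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.0, 0.49]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q)
    print(f"scale={scale:.5f}, max reconstruction error={max_err:.5f}")
```

Each weight now needs one byte instead of four (for float32), at the cost of a rounding error of at most half a quantization step per weight.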

Challenges and Solutions in Deploying Language Models

* Consider hardware and software frameworks to optimize performance.

* Integrate security and regulatory-compliance measures throughout the entire application.

* Create comprehensive security policies.

* Address trust, safety, and biases by prioritizing security, adhering to regulations, and filtering and evaluating datasets.
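The "filtering and evaluating datasets" point can be made concrete with a small pre-processing pass over fine-tuning data. This is a minimal sketch, assuming examples arrive as plain strings; the blocklist, the email pattern, and `clean_examples` are hypothetical illustrations rather than anything presented at the workshop. Production pipelines usually rely on dedicated PII detection and classifier-based filters instead of keyword lists.

```python
import re
from typing import Iterable, List

# Hypothetical blocklist; a real policy would be broader and reviewed regularly.
BLOCKED_TERMS = {"password", "ssn"}
# Simple email pattern: local part, "@", then one or more dot-separated domain labels.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def contains_blocked_term(text: str) -> bool:
    """Match whole words only, so 'helplessness' does not trigger 'ssn'."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return not words.isdisjoint(BLOCKED_TERMS)


def clean_examples(examples: Iterable[str]) -> List[str]:
    """Drop examples containing blocked terms and redact email addresses in the rest."""
    cleaned = []
    for text in examples:
        if contains_blocked_term(text):
            continue  # exclude the whole example rather than trying to patch it
        cleaned.append(EMAIL_RE.sub("[EMAIL]", text))
    return cleaned


if __name__ == "__main__":
    raw = [
        "Contact jane.doe@example.com for access.",
        "My password is hunter2",
        "Helplessness is a theme here.",
    ]
    print(clean_examples(raw))
```

Dropping flagged examples outright, rather than editing them, keeps the filter simple and auditable; the trade-off is losing some otherwise useful data.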

Trust, Safety, and Biases in Language Models

* Biases in LLMs can influence decision-making processes.

* Toxicity and misinformation in LLMs can harm conversations and spread false information.

* Solutions include understanding use cases, evaluating biases and safety issues, and using tools like the Hugging Face Evaluate library.

* Techniques like reinforcement learning from human feedback (RLHF) and fine-tuning can reduce biases and safety issues.

* Monitoring and applying security measures are crucial.

* Specialized, domain-specific models can help address biases and hallucinations within their domains.
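The monitoring and safety points above can be sketched as a thin wrapper that scores every model output before returning it. This is a minimal sketch, assuming you already have some scoring function that returns a risk score between 0 and 1. In practice that could be a toxicity classifier (for example, the toxicity measurement in the Hugging Face Evaluate library), but here both the generator and the scorer are stand-in stubs, and `GuardedResponder` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GuardedResponder:
    """Wraps a text generator with a risk-score check and a simple audit log."""
    generate: Callable[[str], str]   # stand-in for a call to your LLM
    score: Callable[[str], float]    # returns a risk score in [0, 1]
    threshold: float = 0.5
    fallback: str = "Sorry, I can't help with that."
    log: List[dict] = field(default_factory=list)

    def respond(self, prompt: str) -> str:
        output = self.generate(prompt)
        risk = self.score(output)
        blocked = risk >= self.threshold
        # Record every decision so flagged outputs can be reviewed later (monitoring).
        self.log.append({"prompt": prompt, "risk": risk, "blocked": blocked})
        return self.fallback if blocked else output


if __name__ == "__main__":
    # Stub generator and keyword scorer, for demonstration only.
    def fake_llm(prompt: str) -> str:
        return "You are terrible." if "insult" in prompt else "Here is a summary."

    def keyword_risk(text: str) -> float:
        return 1.0 if "terrible" in text.lower() else 0.0

    bot = GuardedResponder(generate=fake_llm, score=keyword_risk)
    print(bot.respond("Summarize this article"))
    print(bot.respond("Write an insult"))
    print(bot.log)
```

Logging every decision, not only the blocked ones, gives a baseline for noticing when the share of flagged outputs starts to drift.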

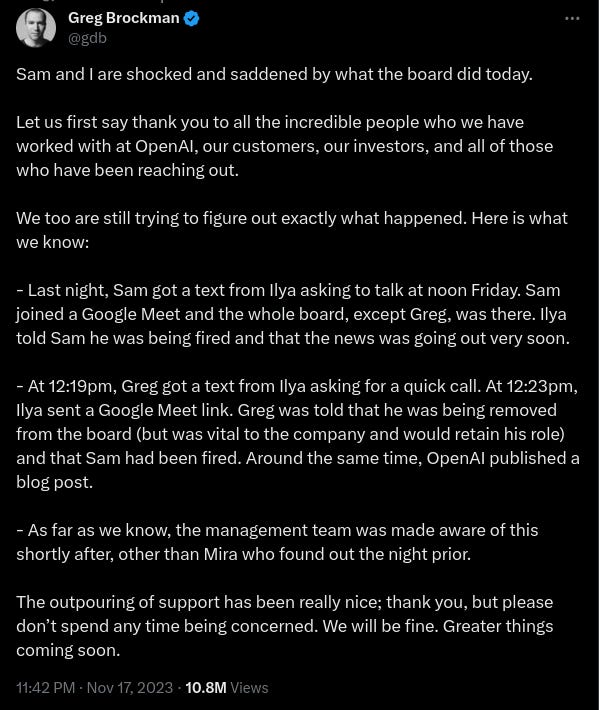

What is happening at OpenAI?

In a statement announcing Sam Altman’s departure as CEO, the board of directors said: “OpenAI was deliberately structured to advance our mission: to ensure that artificial general intelligence benefits all humanity. The board remains fully committed to serving this mission. We are grateful for Sam’s many contributions to the founding and growth of OpenAI. At the same time, we believe new leadership is necessary as we move forward. As the leader of the company’s research, product, and safety functions, Mira is exceptionally qualified to step into the role of interim CEO. We have the utmost confidence in her ability to lead OpenAI during this transition period.”

I don’t know what is going on, but this is a reality show for us nerds!

Podcasts

Here are the good podcasts I listened to this week:

What It’s Really Like to Live in Space With Anousheh Ansari | EP#42 Moonshots and Mindsets

The Scary New Research On Sugar & How They Made You Addicted To It! Jessie Inchauspé | E243

Book Me