Levels of Autonomy in Multi-agent LLM Systems

I wonder what the minimum level of necessary infrastructure change is for us to start seeing the beginning of Level 5 autonomous AI systems!? 🤔

We have been hearing more and more about multi-agent LLM systems and how they are the next big thing. But how far are we from this next big thing? The answer is less straightforward than some social media influencers would have us believe!

For context, when I talk about a multi-agent LLM system, I am referring to a system that leverages a large language model (or another type of reasoning / coordination system) as an orchestration layer sitting on top of a few agents (LLMs or other types of models) that collaborate to achieve a certain goal. The early examples of this came out nearly a year ago, e.g., AutoGPT, where a few powerful LLM instances collaborated on tasks such as writing software with particular characteristics. Creating software turned out to be a particularly attractive sandbox because testing whether software works is easier than evaluating the quality of most other complex tasks humans do.

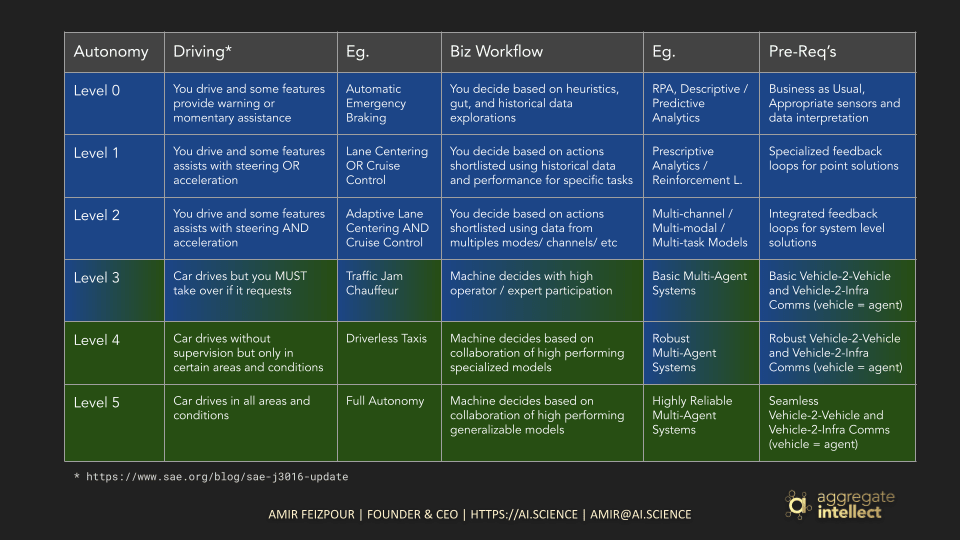

Very early on we started noticing that these types of systems, while cute on the surface, had significant stability problems and could easily fall into execution loops or repeated actions. This quickly reminded me of another area where we have been seeking autonomous machines for years (decades?): driving from A to B. So, I did a side-by-side comparison in one of the presentations I gave last year.
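To make the "repeated actions" failure mode concrete, here is a minimal sketch of the kind of guard one might bolt onto an orchestration loop, assuming each agent step can be summarized as a short action string. `RepeatedActionGuard`, its `window` and `max_repeats` parameters, and the example steps are all hypothetical illustrations, not part of AutoGPT or any other framework.

```python
from collections import deque


class RepeatedActionGuard:
    """Hypothetical guard: flags a likely loop when the same action
    shows up too often within a sliding window of recent steps."""

    def __init__(self, window: int = 6, max_repeats: int = 3) -> None:
        self.max_repeats = max_repeats
        # Only the most recent `window` actions are kept; older ones fall off.
        self.recent: deque = deque(maxlen=window)

    def record(self, action: str) -> bool:
        """Record one action and return True if the agent looks stuck."""
        self.recent.append(action)
        return self.recent.count(action) >= self.max_repeats


# Hypothetical trace of an agent bouncing between two actions.
guard = RepeatedActionGuard(window=6, max_repeats=3)
steps = ["search docs", "write code", "run tests", "write code", "run tests", "write code"]
for step in steps:
    if guard.record(step):
        print(f"Loop suspected at {step!r}; escalating to a human operator")
        break
```

In a Level 3 setup, the natural response to a flagged loop is to hand control back to the human operator rather than let the system retry on its own. Exact string matching is deliberately naive: real agents often loop with slightly reworded actions, which this check would miss.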

While it’s still too early to delegate judgment completely to machines, we have been seeing an increasing trend of being able to do that in both autonomous driving and applications of AI to business workflows. Since autonomous driving has been around for longer, people have gotten past the hype and have sat down and carefully imagined how this might pan out and what’s necessary to get to full autonomy. So, why don’t we draw inspiration from that?

I am not going to repeat the information from the graphics above, but the short of it is that I think we are at Level 3 of autonomy with multi-agent LLM systems, where “the machine decides with a high level of participation from an expert / operator”. Why do I think we are here? Well, as one might see in the cases of AutoGPT and BabyAGI, the stability was nowhere near acceptable for use in any serious business workflow. To complicate matters further, and this is still true, measuring whether the system is moving in the right direction is highly non-trivial. In autonomous driving, we use a large number of sensors and cameras to tell the machine where it is and what’s around it. In autonomous business systems, the equivalent of sensors and cameras could differ drastically from use case to use case. Therefore, from my point of view, the only robust way to leverage these systems today is to keep a human highly involved in their operation.

So, what do we need to get to Level 4 and Level 5? Great question! The autonomous driving crowd has done the hard work of figuring that out and has made it available to us for inspiration:

Level 4, in autonomous driving, requires robust vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication, and eventually, of course, vehicle-to-everything (V2X). This means that in order for vehicles to operate safely and efficiently, they need to “talk” to each other and to their surroundings. This allows them to share real-time information, creating a broader picture of the environment. Through V2V, cars can anticipate each other’s actions and optimize traffic flow, while V2I communication with traffic lights and signs helps them adapt to changing conditions and improve efficiency. The robustness of this communication, meaning its speed, coverage, and security, is critical for the reliable and safe operation of Level 4 self-driving cars.

Well, here is our blueprint: we need to create systems with communication and coordination frameworks amongst all available agents that are robust in the same sense of speed, coverage, and security. And of course, that cannot be achieved before we figure out how to tell each individual agent, with very high accuracy, how far off its actions are, how its errors compound, and what it needs to do to course correct.
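A back-of-the-envelope sketch of why compounding errors matter so much, assuming that step failures are independent and that any single failure derails the whole run. Both are simplifications: real agent errors are often correlated, and some are recoverable. The function name and the 95% per-step reliability are hypothetical illustration values, not measurements of any real system.

```python
def chain_success_probability(step_reliabilities: list[float]) -> float:
    """Probability that every step in a chain of agent actions succeeds,
    assuming independent failures where any one failure ends the run."""
    probability = 1.0
    for p in step_reliabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"reliability must be in [0, 1], got {p}")
        probability *= p
    return probability


# Illustrative only: an agent that gets each individual step right 95% of the time.
for n_steps in (1, 5, 10, 20):
    print(n_steps, round(chain_success_probability([0.95] * n_steps), 3))
```

Under these assumptions, a 95% per-step success rate leaves only about a 60% chance of finishing a 10-step task cleanly and roughly 36% for a 20-step task, which is why per-agent error signals and course correction come before full autonomy.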

Now the interesting thing about Level 5 is that it is fairly unlikely to happen without a significant infrastructure overhaul. In other words, the seamless V2X communication and coordination needed to remove human intervention completely sets a very high bar for the quality and robustness of the infrastructure. This means that we might have to completely rethink our roads, signs and signals, traffic rules, insurance policies, and many other things.

This can give us a glimpse into Level 5 autonomous AI systems as well: we will most probably have to completely rethink our corporate structures, business processes and workflows, business models, capital allocation, insurance policies, governance, and much more. In other words, just as Level 5 autonomous driving is unlikely to take off within the currently available infrastructure, I wonder what the minimum level of necessary infrastructure change is for us to start seeing the beginning of Level 5 autonomous AI systems!? 🤔