Instruction Fine-tuning for LLMs

In this edition you will read about LLMs and work, Sherpa and KnowledgeOps, sense of purpose for founders, and scrolling UX, avoidance, and mental health.

I set up this Substack a few months ago but haven’t managed to get into a regular publishing cadence. So, here is my attempt at being more regular!

In the spirit of the name of this Substack, deep random thoughts, I will share a randomly selected set of my writing with you every week. My posts will relate to LLMs (and AI in general), product development and UX, health (founders’ flavor), startup-related topics, and of course the events we run!

If you want to hear about anything else from me, just hit reply and let me know! (or comment on this if you’re reading it on the web). So, let’s start…

LLM Stuff

At our journal club this week, we took a look at instruction fine-tuning and how it can impact the performance of LLM systems. It was a lively discussion. Interestingly, the discussion lead sees this area as the next big battlefield among all the players in the LLM space: those who get to apply this kind of tech to vertical data, or who provide the platform-level capability that enables it, will have the upper hand. Well, at least that’s my impression of his stance!

Another thing I wrote about this week was a recap from our recent LLM workshop where I provided an update on Sherpa, a tool that aims to revolutionize knowledge management by creating an oracle that collaborates with humans.

Sherpa acts as an oracle that coordinates and orchestrates knowledge-intensive work, facilitating collaboration and enhancing efficiency and effectiveness.

Topics:

-------

๏ Sherpa

* Sherpa is a tool that aims to revolutionize knowledge management

* Sherpa acts as an oracle that collaborates with humans

* Sherpa facilitates collaboration and enhances efficiency and effectiveness

๏ Utilizing Resources for KnowledgeOps

* Knowledge management involves utilizing externalized knowledge sources as well as the tacit knowledge of experts

* The goal is to leverage these resources effectively to achieve better outcomes

* Close collaboration between humans and machines is required for optimal results

* Large language models possess language skills, formal language abilities, and (basic) reasoning skills

๏ Capabilities and Functionalities of Sherpa

* Sherpa aggregates information from various sources and provides connectors to different tools

* Sherpa assists and coordinates a group of people in completing complex tasks

* Sherpa is an open-source project with potential for commercial partnerships

๏ Challenges and Applications of Sherpa

* Challenges that are being addressed include robustness, evaluation, and creating evaluation sets

* Potential applications of Sherpa include research-intensive workflows like writing sales proposals, research reports, and other complex business documents

* Discussions include integration possibilities, data sources, user experience, multimodal capabilities, and managing context and memory

Startup Stuff

I had a chat with Garrett Townsend about a range of things, from the AI community and LLMs to founders' mental health:

I talked about everything that's going on in the AISC community

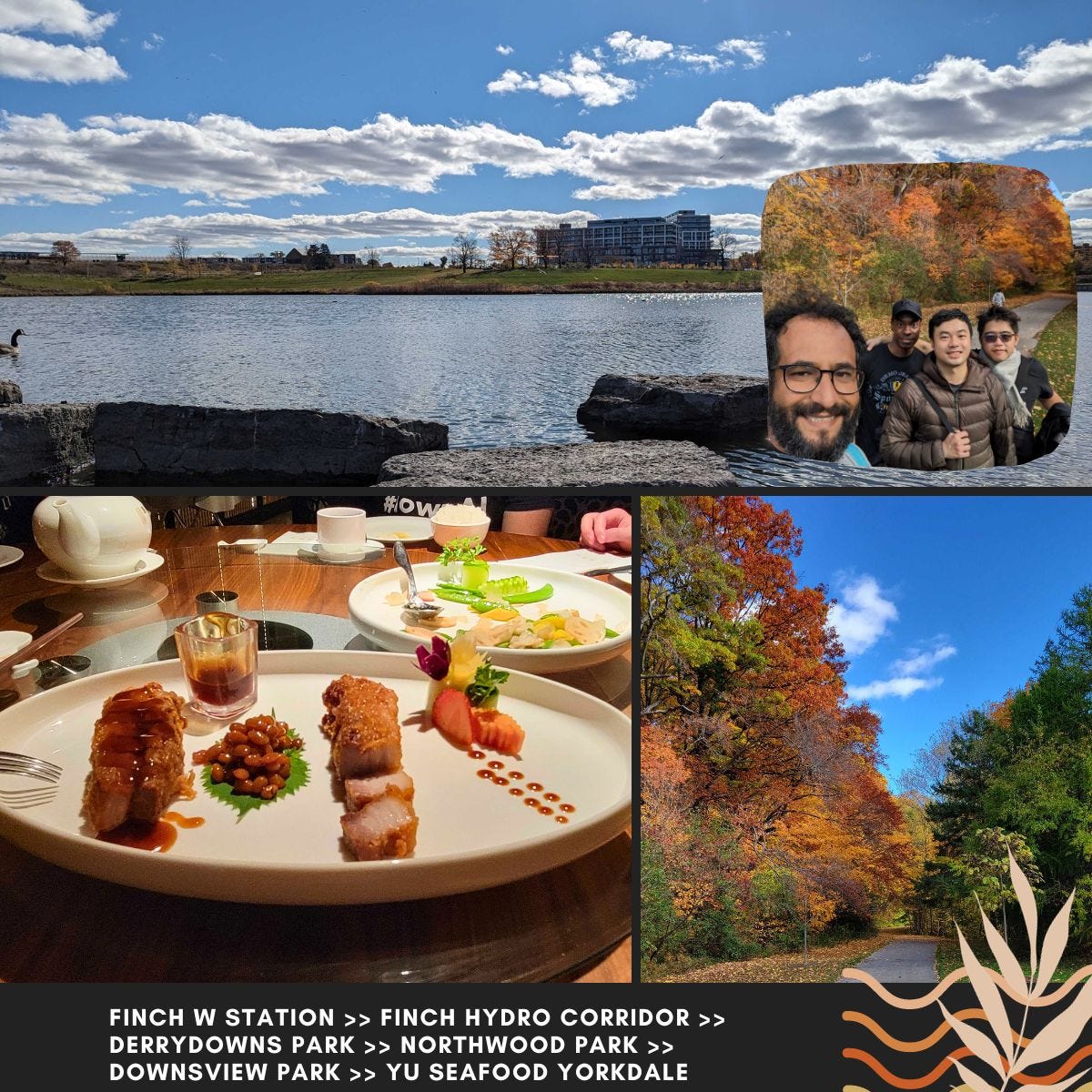

Then I talked about how the pandemic lockdown led to starting the founders' hikes to help with our mental health

I talked about how my transition from academia to industry was the beginning of my journey to get machines to read all the documents and tell me what to pay attention to

I talked about how what we are building is like level 2-3 autonomous driving, with near-term industry use cases in mind

I talked about how these kinds of systems can facilitate human-human communication and collaboration, and that's the most exciting use case for me

Watch the recording here

Hit reply and let me know what you think about this format.

There's pretty solid research on human cognitive architecture by Carnegie Mellon: http://act-r.psy.cmu.edu/. I'm curious if we're at the point where LLMs can help implement it. It involves things like a continuous thought stream, metacognition, reflection, abstraction, and so on.