Do you think tools like ChatGPT help or hurt productivity?

In discussions about ChatGPT, I have maintained that LLMs are language models, and language is what they are good at. The onus of deciding what kind of product to build with them, and what guardrails it needs, is on the product designer.

As revolutionary as ChatGPT has been in demonstrating what LLMs bring to the table, and as smart as the choice of a chat interface to showcase that is, my biggest criticism is that it puts the onus of verifying the information it provides on the user:

“Free Research Preview. ChatGPT may produce inaccurate information about people, places, or facts.”

You may not have noticed this line in ChatGPT’s footnote.

So, why am I ranting about this?

A study published by BCG concludes:

Around 90% of participants improved their performance when using GenAI for creative ideation.

Performance dropped by 23% on business problem solving, a task outside the tool’s current competence, when participants took GPT-4's misleading output at face value.

Adopting generative AI is a massive change management effort.

I do agree that change management is an important step, but I still maintain that better product design will be a significant part of the story too. Imagine all the expensive “cultural work” that companies would not have to do if best practices were already built into the products and platforms they use!

There will be a need for change management, cultural adaptation, and training, but doing them without an eye on how the tools need to evolve to make them easier would be a missed opportunity.

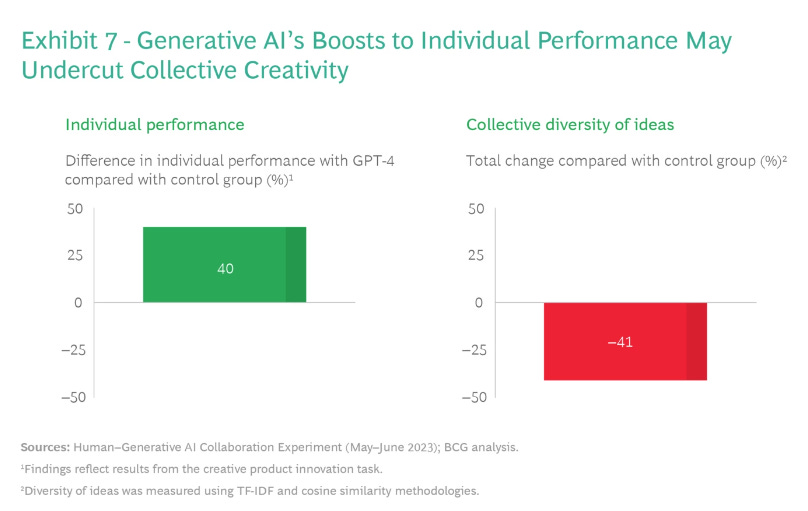

One of the interesting findings of the BCG report is that “people seem to mistrust the technology in areas where it can contribute massive value and trust it too much in areas where the technology isn’t competent… [and] the technology’s relatively uniform output can reduce a group’s diversity of thought by 41%.”

Another interesting observation is that “People who received a simple training on how GPT4 worked did significantly worse on the same tasks as the untrained group … this led us to consider whether this effect was the result of participants’ overconfidence in their own abilities to use GPT-4—precisely because they’d been trained”

The two screenshots below, from the report, illustrate how this kind of tech, even in its current baseline product state, can significantly raise the baseline performance of a group of workers, but most likely at the cost of stifling group creativity.